Design overview and first steps

BeatMaster is a music RPG designed and programmed by me, with art and other assets from Miko-Koro and music by MidiGogblin. In it, players take on the role of a young DJ as she fights the demons that have begun to pour out of an astral tear in the local club. Players will explore dungeons based on different genres of music, fight monsters with rhythm-based combat, and search the city for allies and equipment.

I hope to have a playable demo of this game completed by the spring of 2021. This series of blog entries will be my free-form devlog. It will contain both development anecdotes and more in-depth explanations of my design and code.

I have never written a devlog before, so this may be scattershot at times, but I do intend to post regular updates as development progresses. This first entry gives a general overview of some of the systems I have begun to develop; future posts will explore these systems and solutions in more depth.

Design Pillars

Before I started any work on this project, I had a few core concepts I knew I wanted to build a game around:

- Rhythm-based combat

- RPG-style stat development

- Catchy original music

- Lighthearted and surreal story

- Player dialogue choice

I have experimented with many of these concepts previously, so I already had in mind how I would tackle the dialogue system and the RPG elements. I have also attempted to make a rhythm game in Unity before, so I was aware of how challenging the musical elements would be. Because of this, I started experimenting with the audio/rhythm elements first.

How To Rhythm in Unity?

It is very important to me that the player is able to get into “the groove” of the combat, and I would like to see players bobbing their heads as they fight. Being in the groove is such a core joyful feeling that musicians get to experience often, and that is something I want the combat to express. However, because of the fickle nature of computer timing, making combat that relies on musical timing can be difficult. If timing is tied to the frame rate and the player is running even a single fps too fast or slow, over the course of the song the input timing can become offset enough to make the game unplayable and completely destroy the groove.

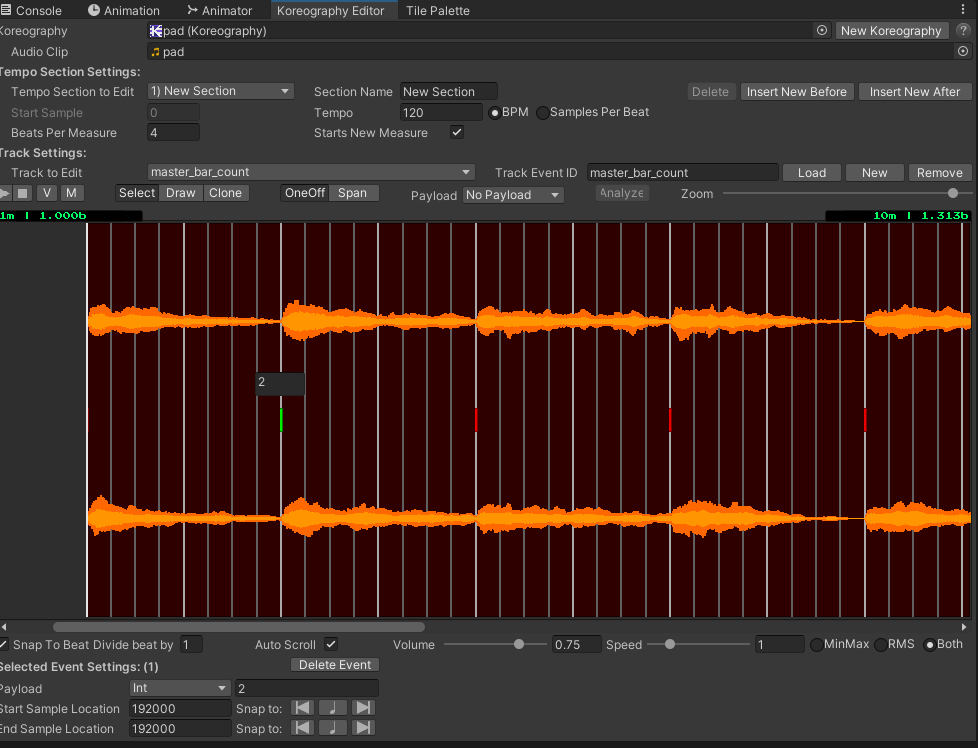

In earlier projects I have tackled this problem by syncing events to the time sample of a given audio clip, but I have never found an elegant way to make this scalable. I quickly decided to find a premade solution to save time on the audio-syncing and events. After some searching I decided Koreographer by SonicBloom was a good fit for my project. I got the base version as it seemed to have all the features I need.
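For context, the sample-based approach can be sketched roughly like this. It is a minimal illustration, not code from BeatMaster: the BeatClock class, its method names, and the fixed-tempo assumption are all made up for the example. In Unity the sample position would typically come from AudioSource.timeSamples and the sample rate from AudioClip.frequency.

```csharp
using System;

// Hypothetical helper: derives musical position from the audio sample position
// instead of from counted frames. Assumes a constant tempo.
public static class BeatClock
{
    // Zero-based index of the beat that contains the given sample position.
    public static int BeatIndex(long timeSamples, int sampleRate, double bpm)
    {
        double samplesPerBeat = sampleRate * 60.0 / bpm;
        return (int)Math.Floor(timeSamples / samplesPerBeat);
    }

    // Signed distance in seconds from the nearest beat:
    // negative means the position is early, positive means it is late.
    public static double OffsetFromNearestBeat(long timeSamples, int sampleRate, double bpm)
    {
        double samplesPerBeat = sampleRate * 60.0 / bpm;
        double nearestBeat = Math.Round(timeSamples / samplesPerBeat);
        return (timeSamples - nearestBeat * samplesPerBeat) / sampleRate;
    }
}
```

Because the beat is derived from the audio position rather than from frames, it does not drift when the frame rate changes. The hard part, as noted above, is scaling this idea to many hand-authored events.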

Using Koreographer

Koreographer allows me to quickly add events with payloads to any audio clip. For example, I have added an event on every bar of the master track, with the bar number as its payload. I can then easily register any script to listen for this event:

    void Start()
    {
        text = GetComponent<Text>();
        // Registers the method onNewBar with the Koreographer
        Koreographer.Instance.RegisterForEvents("master_bar_count", onNewBar);
    }

    // Called by my custom event
    void onNewBar(KoreographyEvent evt)
    {
        int barNum = evt.GetIntValue();
        text.text = "BAR: " + barNum;
    }

Powerful.

Unfortunately, Koreographer does not supply an adequate solution for triggering and syncing multiple audio tracks at once. It provides a multiTrackPlayer, but this requires all the audio tracks to be playing from the start. I will go into more depth on my solution to this later.

I had enough of an idea to start on art and combat.

Art Design

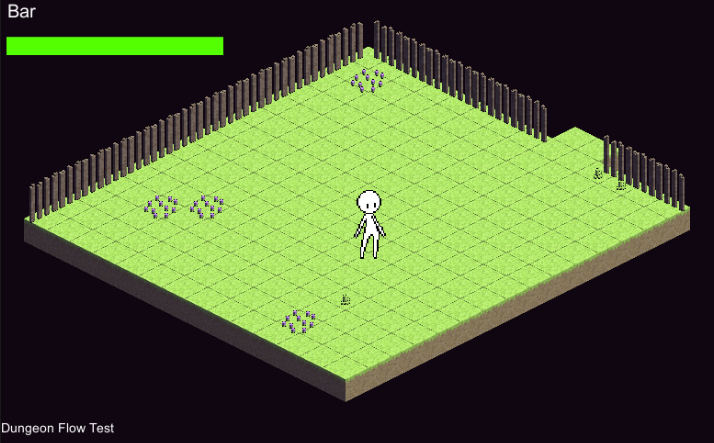

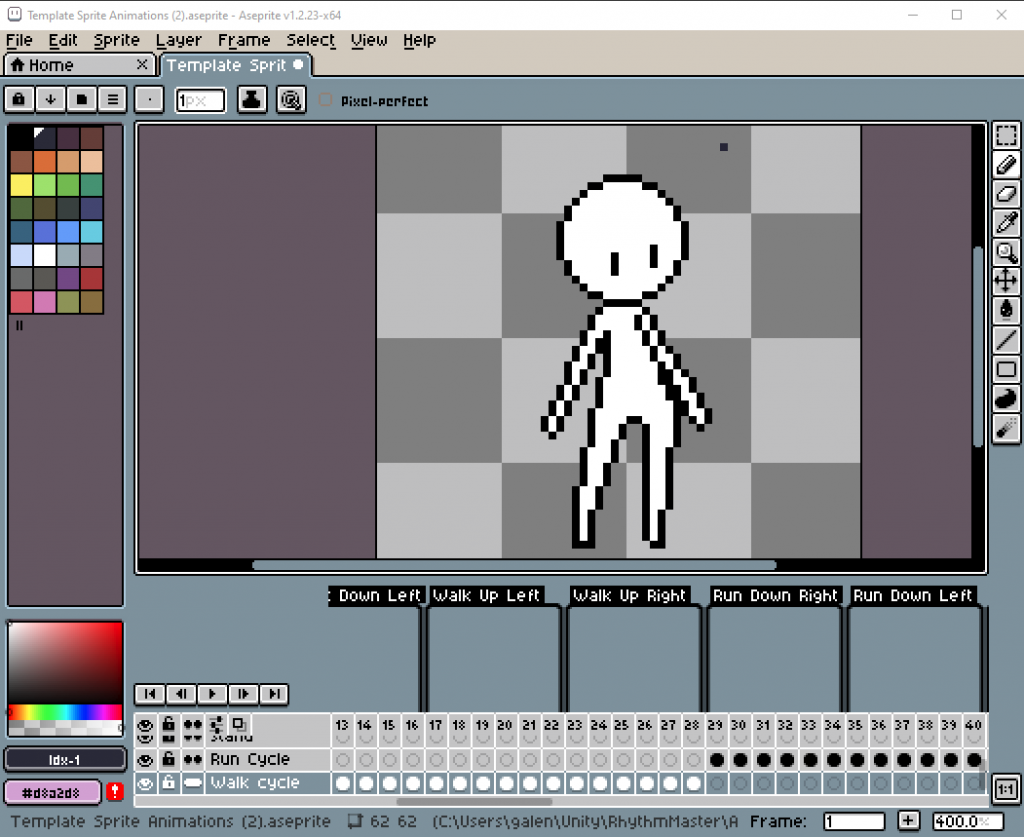

After discussing the project with Miko, we decided on an isometric 2D pixel art style with fairly large sprites (62×62, although this may get normalized to 64×64), which would allow for more detailed character design while still allowing for simple animations.

Miko also began working on some character design for the dialogue sprites as well as some environmental design. The story needs to be developed more before we are able to make much more progress on the characters.

We are going for a retrowave/occult mashup for the design of the game.

Rendering Animations

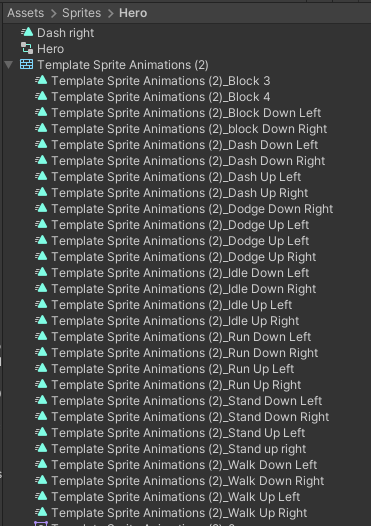

We are using Martin Hodler’s Aseprite Importer for Unity, which is a great little tool. It’s only available through his GitHub, but it’s very useful. It automatically converts Aseprite files into separate animations named after the tags in the Aseprite files.

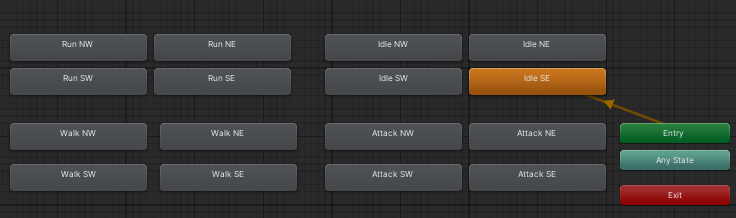

I then assign each animation to a state in the Hero Animator. These states are accessed and set by the IsometricPlayerRenderer script:

    ...
    private void SetMovingDirection(int dir, float moveMagnitude)
    {
        bool isRunning = moveMagnitude > startRunSpeed;
        switch (dir)
        {
            case 0:
                if (isRunning) { animator.Play("Run NW"); }
                else { animator.Play("Walk NW"); }
                break;
            case 1:
                if (isRunning) { animator.Play("Run SW"); }
                else { animator.Play("Walk SW"); }
                break;
            case 2:
                if (isRunning) { animator.Play("Run SE"); }
                else { animator.Play("Walk SE"); }
                break;
            case 3:
                if (isRunning) { animator.Play("Run NE"); }
                else { animator.Play("Walk NE"); }
                break;
            default:
                // animator.Play("Idle SE");
                SetIdleDirection(dir);
                break;
        }
    }
    ...

Here, dir is set by a method that splits a circle into a number of slices (in this case four) and returns the index of the slice that the Vector2 from the player input falls in.
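As a rough sketch of that slicing idea (not the actual project code): DirectionSlicer, DirectionToIndex, and the startAngleDeg parameter are hypothetical names, and the example takes plain x and y floats rather than a Unity Vector2 so it can run on its own. Slices are counted counter-clockwise from the start angle, so starting at 90 degrees with four slices gives the same NW, SW, SE, NE ordering as the switch statement.

```csharp
using System;

// Hypothetical helper: maps an input direction to one of N equal circle slices.
public static class DirectionSlicer
{
    // Returns the zero-based index of the slice containing the direction (x, y).
    // Slice 0 starts at startAngleDeg and indices increase counter-clockwise.
    public static int DirectionToIndex(float x, float y, int sliceCount, float startAngleDeg)
    {
        // Angle of the input in degrees, in the range -180..180.
        double angle = Math.Atan2(y, x) * 180.0 / Math.PI;
        double step = 360.0 / sliceCount;

        // Angle measured from the start of slice 0, wrapped into 0..360.
        double relative = ((angle - startAngleDeg) % 360.0 + 360.0) % 360.0;

        // Defensive clamp in case of floating point rounding at the wrap-around.
        return Math.Min((int)(relative / step), sliceCount - 1);
    }
}
```

For example, DirectionToIndex(-1f, 1f, 4, 90f) lands in slice 0 (NW) and DirectionToIndex(1f, 1f, 4, 90f) in slice 3 (NE). A zero-length input still produces an index here, so the caller would need to check for "no input" separately, for instance to fall through to an idle animation.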

Speaking of player input…

Unity’s Input System

I decided to switch from Unity’s default input handling to its newer Input System. I really like the design of the new system because it lets me reduce clutter in my Update methods, and using input callbacks just feels more intuitive to me.

ISSUES: I initially built the project in Unity 2020.1.1f1; however, after installing the Input System, some of the callbacks were not firing as expected. A few other people on the Unity forums reported similar problems, but no one had a very clear solution. After updating to 2020.2, the problem seemed to resolve itself, and the Input System is working as expected. Phew.

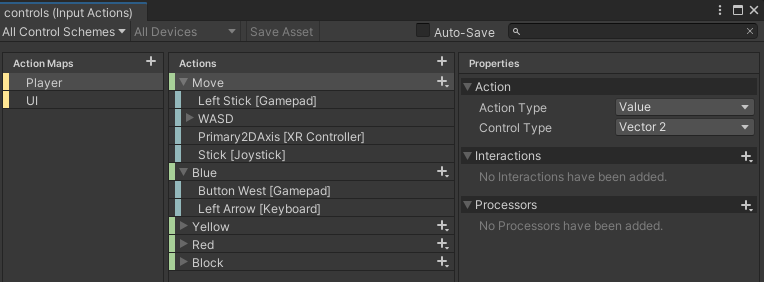

As instructed in the Input System Quick Start Guide, I created an Input Actions Asset.

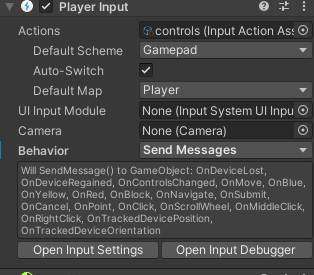

I then added the Player Input component to my Hero prefab and set the Behavior to Send Messages, which allows me to use callbacks named after the Input Actions:

    public void OnGreen()
    {
        Hero.active.playerStateMachine.InputGreen();
    }

    public void OnYellow()
    {
        Hero.active.playerStateMachine.InputYellow();
    }

    public void OnRed()
    {
        Hero.active.playerStateMachine.InputRed();
    }

    public void OnBlue()
    {
        Hero.active.playerStateMachine.InputBlue();
    }

    // Callback from Player Move Input
    public void OnMove(InputValue value)
    {
        Hero.active.playerStateMachine.InputStick(value);
    }

As you can see, I am using a finite state machine to decide what to do with the inputs. If the player is in CombatState, the east button will attack an enemy, but if the player is in RoamState, the east button will open the menu.
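To illustrate the idea, here is a stripped-down sketch of how a state machine can route the same button to different actions. It is not the real BeatMaster code: the IPlayerState interface, the string return values, and the ChangeState method are invented for the example, and it assumes Red is the east button, as on an Xbox pad.

```csharp
using System;

// Hypothetical interface: each state decides what a button press means.
public interface IPlayerState
{
    string InputRed();
}

public class RoamState : IPlayerState
{
    // While roaming, the east button opens the menu.
    public string InputRed() => "open menu";
}

public class CombatState : IPlayerState
{
    // While in combat, the same button attacks an enemy.
    public string InputRed() => "attack enemy";
}

public class PlayerStateMachine
{
    private IPlayerState current;

    public PlayerStateMachine(IPlayerState initial)
    {
        current = initial;
    }

    public void ChangeState(IPlayerState next)
    {
        current = next;
    }

    // The input callback forwards to whichever state is currently active.
    public string InputRed() => current.InputRed();
}
```

The input callbacks never need to know which state is active. They forward the press, and the current state supplies the behavior, which keeps the per-button methods as short as the ones shown earlier.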

As I write this, I can see some inconsistency in my naming pattern. While Blue, Yellow, Red, etc. make sense for the Xbox gamepad I am using, they are not great for other controllers. I may refactor these to read East, North, West, etc.

Combat

Designing the combat has taken most of my attention thus far. I will write a separate blog post on the evolution of the combat system when I am more certain of what it will be like.

Right now, the enemies are each tied to a different stem in the music. For example:

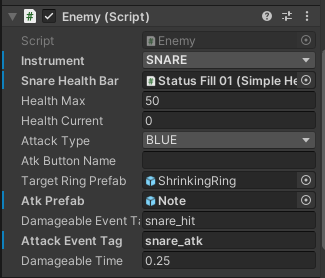

This enemy is connected to the SNARE hits of the song. It is targeted with the BLUE button. There are two separate Koreography events it listens for: snare_hit, which is when it is vulnerable, and snare_atk, which determines when it attacks. The Damageable Time is the window of error to be able to damage it.

When in combat the player uses the face buttons to attack different enemies. Each enemy is vulnerable on the various drum hits and beats of the master track. To damage the enemies the player must input the attacks in time with the song. The player also has the ability to block attacks.
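The timing check behind a Damageable Time window could look something like the sketch below. This is an assumption about the general technique, not the actual combat code, which is still changing: the DamageWindow class and its method names are hypothetical, and times are plain seconds of song time. It only accepts inputs that land on or after the hit event. Forgiving early inputs as well would require knowing when the next event is due.

```csharp
using System;

// Hypothetical helper: tracks when an enemy was last made vulnerable
// and checks whether a player input falls inside the allowed window.
public class DamageWindow
{
    private readonly double damageableTime; // window length in seconds
    private double lastHitTime = double.NegativeInfinity;

    public DamageWindow(double damageableTime)
    {
        this.damageableTime = damageableTime;
    }

    // Call this when the vulnerability event (for example snare_hit) fires.
    public void OnHitEvent(double songTime)
    {
        lastHitTime = songTime;
    }

    // True if the input arrived no later than damageableTime seconds
    // after the most recent hit event, and not before it.
    public bool IsVulnerable(double inputTime)
    {
        double elapsed = inputTime - lastHitTime;
        return elapsed >= 0.0 && elapsed <= damageableTime;
    }
}
```

With a window of 0.15 seconds and a hit event at 1.0, an input at 1.1 counts and an input at 1.3 does not.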

I am still deciding whether I want more turn-based combat or something closer to popular top-down action games like Hades. I will go into more depth on this code once it is cleaner.

Next Steps…

Making games is hard, especially with such a small team. As I am still a relative novice, it is hard for me to have a super clear roadmap of what needs to happen, especially while we are still in a prototype phase. I know for sure that the combat needs to be fun and relatively simple. Most of my time will be focused on finalizing the combat system.

I will also be spending some time writing, as I think the story may inform the combat and gameplay in some places. I plan on writing some character breakdowns for the major characters. This will allow Miko to do more sprite work. I also hope to have a broad-strokes one-sheet completed soon.

If you found any of these topics interesting, I will be happy to elaborate and share more code, so please comment here or on my Twitter.

Thanks for reading!